Creating Kinect Controls for Angry Birds

Before I begin, let me give you a little background. About a month ago we decided to try to create NUI (Natural User Interface), or Kinect, controls for Angry Birds. We did it partly as a learning experience, but also as a proof of concept that games like Angry Birds (i.e. applications that lend themselves to touchscreen devices) can work in a gestural / depth-camera environment better than has been demonstrated so far. In the end we tried many different input methods that are possible with a Kinect, and I wanted to catalog that experience.

I’ll start with the worst input paradigm we tried and go from there.

#5 The Faux Mouse

**The Idea**

First we just tried mapping the hands to the screen as if each were a mouse or finger. Each hand would control a hand-like cursor and move it around the screen. Clicks could be performed either by grasping with the hand or by pushing towards the screen. The game would be played normally, except you’d click on everything using your hand.

**The Reality**

This input method was by far the worst. Your hands are simply not mice; they get tired much faster and are not as dexterous. Even with heavy filtering and snap-able buttons, clicking on a button or object on the screen using your hands as mice is too nuanced and motor-skill-intensive an operation. Playing a level this way took us an order of magnitude longer, or more, than someone playing it on the iPhone.

When pushing towards the screen, a lot of care had to be taken to deal with user drift. When a user pushes towards the screen, they may in fact be pushing towards many possible locations. They may drift towards the TV, or towards the camera if that’s their focus. They may also consciously attempt to keep their hand straight as they push forward. Whatever the case, a user will drift off their intended target.

When we tried grasp detection using computer vision, we saw drift again. When a user opens and closes their hand, the volume of the hand changes from the point of view of the camera. This displaces the hand’s center of mass, causing it to shift downward when the hand is closed. There are solutions to this problem, such as eliminating the fingers from the hand volume calculation, but this is difficult given the quality of the data.
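To see why closing the hand drags the tracked point downward, here is a toy illustration. The two binary masks are hypothetical stand-ins for a segmented hand in a depth image (they are not real Kinect data), and row 0 is assumed to be the top of the image.

```python
# Toy hand masks (hypothetical, not real sensor data). '1' marks a hand pixel.
# Row 0 is the top of the image, so a larger row index means lower on screen.
OPEN_HAND = [
    "0101010",  # extended fingers
    "0101010",  # extended fingers
    "0111110",  # palm
    "0111110",
    "0111110",
]
CLOSED_HAND = [
    "0000000",  # fingers curled in, no longer visible above the palm
    "0000000",
    "0111110",
    "0111110",
    "0111110",
]


def centroid_row(mask):
    """Return the mean row index of all hand pixels in the mask."""
    rows = [r for r, line in enumerate(mask) for pixel in line if pixel == "1"]
    return sum(rows) / len(rows)


open_row = centroid_row(OPEN_HAND)
closed_row = centroid_row(CLOSED_HAND)
# A positive value means the tracked center moved down when the hand closed.
shift_down = closed_row - open_row
```

In this sketch a cursor driven by the centroid would jump downward at exactly the moment the user tries to click. Computing the centroid from the palm rows only is the kind of result the finger-elimination approach is after.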

In the end the drift issues prevented us from playing as well as we could with a real mouse or on the iPhone. Angry Birds is a game of quick victory and defeat as well as a game of precision, so with large problems in both precision and the speed of game play progression, we ditched this idea.

#4 Voice

**The Idea**

This one is exactly what you might expect: firing the bird using your voice, either by saying “Fire!” or maybe… “Ca Cah!”.

**The Reality**

We really were not sure how this method would perform. The voice portion was layered onto the mouse control system. Essentially you would click on the slingshot, move your hand to aim, and exclaim viciously to let loose the birds of war. The hope was that it would alleviate the drift problems, which it did: without having to push your hand forward to fire, drift was eliminated from firing.

However, there were other problems. There was a delay in the speech recognition, and often outright failure if you didn’t say the words just right. We tried several tricks, like using multiple words to identify “Fire”. One good way to generate that list is to take the top 10 words the recognizer mistook for “fire” and add those to the dictionary as triggers for firing the bird.
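The trigger-list trick can be sketched in a few lines. This is only an illustration: the function names and the sample log are hypothetical, and it assumes you have already collected the words the recognizer returned while people were actually saying “fire”.

```python
from collections import Counter


def build_fire_triggers(misrecognized_words, top_n=10):
    """Build a trigger set: 'fire' plus the top_n most common misrecognitions.

    misrecognized_words is assumed to be a list of words the recognizer
    returned when a speaker really said "fire".
    """
    counts = Counter(word.lower() for word in misrecognized_words)
    triggers = {"fire"}
    triggers.update(word for word, _ in counts.most_common(top_n))
    return triggers


def is_fire_command(recognized_word, triggers):
    """Return True if the recognized word should fire the bird."""
    return recognized_word.lower() in triggers


# Hypothetical log of misrecognitions, for illustration only.
sample_log = ["hire", "hire", "five", "fyre", "hire", "five"]
triggers = build_fire_triggers(sample_log, top_n=2)
```

The trade-off is that every extra trigger word makes accidental firing more likely, so the list is worth keeping short.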

Moving Away From Faux Mouse

After the failures with both #4 and #5 we went back to the drawing board. We needed to get away from mouse- or touch-device-centered thinking. The worlds are simply too different to treat them the same. So we prototyped 3 other solutions, all of which ended up being better than the mouse-style interface.

The ideas all stem from an understanding that when you port an application from a touch-enabled world you need to think primarily about 2 things:

- Context

- Automation

Context – How can you reduce and scope the options so that a broad array of them can be presented to the user, but with only a few usable at any given time through a small vocabulary of motions?

An example from Angry Birds is all the options the user can perform in the game:

- Fire Bird

- Activate Bird Special Attack

- Restart

- Pan

- Zoom

- Return to the Menu

We needed to find a way to contextualize these options when moving away from a mouse driven style interface.

Automation – Find the things in the application that everyone does without thinking about them and automate them. If they aren’t relevant to game play, find a way to make them irrelevant in a NUI application.

An example from Angry Birds is activating the slingshot. You probably don’t think about it when playing the game but to fire a bird you must first place your finger on the slingshot before drawing back the bird to fire it. While this is unbelievably trivial to the point of not thinking about it on an iPhone, it’s a huge pain in an environment where you have to get a virtual hand cursor over it, even more so if you then need to push forward to activate it.

So we needed to find a way to automate clicking the slingshot. Instead of being clicked explicitly, it would be activated implicitly by some gesture that begins the act of firing a bird, independent of where the hand is on screen relative to the slingshot.

#3 Arclight

**The Idea

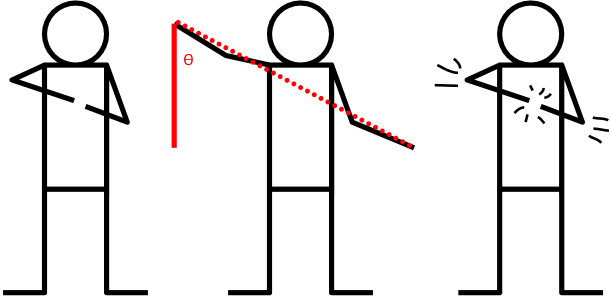

**You would draw back the slingshot by bringing your hands together. Then bring your hands apart and rotate them around your center of mass to change the firing angle on the slingshot. Then once you’ve settled on the firing angle, bring your hands together fast to trigger the fire.

**The Reality**

The problem with this kind of activation of the slingshot was the drift when the hands come together. This can be partially accounted for, but the correction is heuristic and can be erroneous. Additionally, activating the bird’s special power was difficult: you would have to choose a different kind of interaction for it, which would complicate the process.

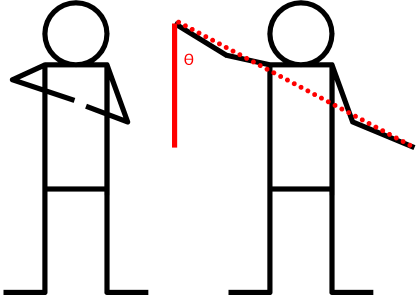

#2 Stretch and Snap

**The Idea**

This idea grew out of the Arclight firing system. To attempt to solve the problem of drift, the slingshot fires as soon as the arms reach a certain distance apart.

**The Reality**

With this firing system you still have the problem of determining how to activate the bird’s special power. You also introduce a new problem: all birds are fired at maximum drawback. You also need to give the user feedback so that they know how close they are to the snap, such as some kind of firing progress bar.
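A minimal sketch of the snap check and the progress feedback might look like the following. It assumes hand positions arrive as (x, y, z) tuples in meters; the 0.9 m snap distance and all function names are made up for illustration and were not taken from our prototype.

```python
import math

# Assumed hand separation (in meters) at which the slingshot fires.
SNAP_DISTANCE_M = 0.9


def snap_progress(left_hand, right_hand, snap_distance=SNAP_DISTANCE_M):
    """Return (progress, fired) for one frame.

    progress is the hand separation as a fraction of the snap distance,
    clamped to [0, 1]; fired is True once the separation reaches it.
    """
    separation = math.dist(left_hand, right_hand)
    progress = min(separation / snap_distance, 1.0)
    return progress, separation >= snap_distance


def render_bar(progress, width=20):
    """Render a text progress bar so the user can see how close the snap is."""
    filled = int(progress * width)
    return "[" + "#" * filled + "-" * (width - filled) + "]"


# Example frame: hands 0.6 m apart, so the bar is about two-thirds full.
progress, fired = snap_progress((-0.3, 1.0, 2.0), (0.3, 1.0, 2.0))
bar = render_bar(progress)
```

Because firing depends only on the distance between the hands, no push towards the screen is needed, which is what removes the drift. It is also why every bird leaves at maximum drawback.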

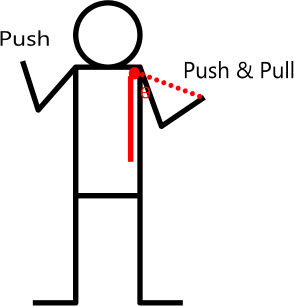

#1 Axis Separated

**The Idea**

For this idea I separated the functions of the hands into distinct responsibilities. Your left hand activates the slingshot by pushing forward (it doesn’t matter where). Once a threshold is crossed the slingshot is activated; from then on, the slingshot firing angle is calculated from the left hand’s location relative to the shoulder. To fire the bird, the right hand is pushed forward and pulled back, which sends the bird flying. To activate the bird’s special power you push the right hand forward again.
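The control flow described above can be sketched as a small per-frame state machine. This is an illustration under stated assumptions, not our actual implementation: joints are assumed to arrive as x/y/z positions in meters with z being the distance from the sensor, the 0.35 m push threshold is invented, and every name here is hypothetical.

```python
import math
from dataclasses import dataclass

# Assumed distance (meters) a hand must be in front of its shoulder to count as a push.
PUSH_THRESHOLD_M = 0.35


@dataclass
class Joint:
    x: float
    y: float
    z: float  # distance from the sensor; pushing forward makes z smaller


def is_pushed(hand, shoulder, threshold=PUSH_THRESHOLD_M):
    """True when the hand is at least `threshold` closer to the sensor than the shoulder."""
    return (shoulder.z - hand.z) >= threshold


def aim_angle_degrees(hand, shoulder):
    """Angle of the hand relative to the shoulder in the x/y plane."""
    return math.degrees(math.atan2(hand.y - shoulder.y, hand.x - shoulder.x))


class AxisSeparatedControls:
    """Left hand activates and aims; right hand fires and triggers the special."""

    def __init__(self):
        self.state = "idle"  # idle -> aiming -> in_flight -> idle
        self.angle = None
        self.armed = False
        self.right_was_pushed = False

    def update(self, left_hand, left_shoulder, right_hand, right_shoulder):
        """Process one frame and return an event name, or None."""
        right_pushed = is_pushed(right_hand, right_shoulder)
        right_push_started = right_pushed and not self.right_was_pushed
        self.right_was_pushed = right_pushed
        event = None
        if self.state == "idle":
            # The left push can happen anywhere; only its depth matters.
            if is_pushed(left_hand, left_shoulder):
                self.state, event = "aiming", "activate"
        elif self.state == "aiming":
            self.angle = aim_angle_degrees(left_hand, left_shoulder)
            if right_push_started:
                self.armed = True  # pushed forward; wait for the pull back
            elif self.armed and not right_pushed:
                self.armed = False
                self.state, event = "in_flight", "fire"
        elif self.state == "in_flight":
            # A second right-hand push triggers the bird's special power.
            if right_push_started:
                self.state, event = "idle", "special"
        return event
```

A real game would also need to return to the idle state when the bird lands without the special being used; that is left out to keep the sketch short. Note that the on-screen cursor position never enters into it: aiming uses only the hand-to-shoulder angle, and firing uses only depth.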

**The Reality**

This method ended up working perfectly. It doesn’t result in any drift when firing is activated. It is also easy to perform because all the motions can be performed with your arms down by your sides, reducing exhaustion in long game play sessions.

Lessons Learned

You hear it all the time, but it is critically important to prototype ideas when it comes to creating Kinect controls. They simply don’t work as well in reality as they do in your head. Here’s a demonstration video of the end result: